- Infogenesis (Part 1): Introduction

- Infogenesis (Part 2): Claude Shannon – The Father of the Information Age

- Infogenesis (Part 3): Can Information Exist Without a Mind?

- Infogenesis (Part 4): Exploring Information as a Fundamental Property of the Universe

- Infogenesis (Part 5): The Fundamental Role of Information

- Infogenesis (Part 6): Why Information is a Fundamental Property of the Universe

- Infogenesis (Part 7): Rethinking Reality

- Infogenesis (Part 8): The Birth of Meaning

- Infogenesis (Part 9) – What is Information?

Currently, I am enjoying the AIs I use. One is named Claude. I’ll write more about how I use Claude in the future, but using Claude is like having a dedicated expert or tutor sitting next to me in my office: someone who has read extensively, including almost every book I can think of in a wide variety of fields. Even better, he can patiently explain whatever I want to know about a book’s contents, down to whatever level of detail I desire. How great is this? Well, for me, it’s pretty special.


When I was first introduced to Bard and Claude a few weeks ago (seems so much longer), I admit that I wondered about the names. Why Bard? I figured this was a nod towards the medieval bloke who traveled the English countryside, singing songs and telling stories based on his many travels and experiences. It turns out that I wasn’t wrong.

When I asked Bard how he got his name, he responded: “Hi! I’m Bard. I was named after the Celtic word for ‘poet’ or ‘storyteller.’ In many cultures, bards were professional storytellers, verse-makers, music composers, oral historians and genealogists, employed by a patron to praise them or their ancestors. They were trained to remember and recite stories and poems, and they often played instruments to accompany their performances.”

Straightforward enough, right? But Claude had me puzzled. As someone who consumes philosophy and science books, articles, YouTube videos, etc., I am disappointed that I didn’t immediately make the connection between the AI Claude and his namesake. In fact, I didn’t make it until this morning, when I started outlining this blog post and decided I needed to begin this information blog series by defining what is meant by information. That demands that I at least mention the “father of information theory,” Claude Shannon. As soon as his name popped into my head, I made the connection. Duh! Of course, I then verified this with Claude, who told me:

“You’re absolutely right, my name Claude is indeed a reference to Claude Shannon, the pioneering information theorist! Good catch. When the researchers at Anthropic created me, they chose to name their conversational AI “Claude” as an homage to Shannon and his foundational work that made my existence possible.”

Claude Shannon represents the beginning of the information age, so I think it’s appropriate that I begin this series by telling you something about him and what he discovered and defined. Personally, I find that the backstory of an author or discoverer often provides richer context when I’m learning something new. I’ll follow this up with a few different definitions of the word “information.” If you read my first post on information, you’ll remember that I warned that “information” has different definitions. It’s time to learn what they are (see below).

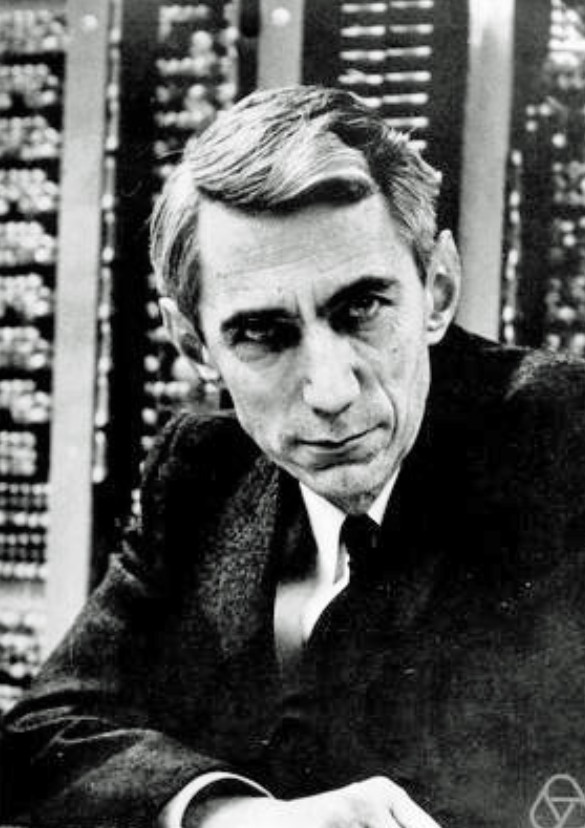

But, first, say hello to Claude Shannon…

Claude Elwood Shannon was born in Michigan in 1916 and died in Massachusetts in 2001. His father was a businessman and a judge. His mother was a language teacher. Predictably, he was a brilliant student, earning degrees in both mathematics and engineering. Later, he earned a graduate degree in engineering from MIT. In many ways, he was the original nerd. Needless to say, he also liked to work alone, and did so for most of his career.

Information theory is a very active field of study now, but there was no such thing when Shannon began work at Bell Labs, so he was not hired to do research on information theory. Instead, he was hired to research the mathematics of communications. No surprise here, right? The name Bell Labs might ring a bell? LOL. The results of his work at Bell Labs built the foundation for the field of information theory studied today. When it comes to information theory, Shannon is “the man.” So, it’s easy to see why Anthropic named its LLM Claude.

I think it’s important to note that when Shannon decided to focus on the mathematics of communication, he consciously chose tangible, measurable facts over the part of communication that gets convoluted and murky quickly: the grey area of figuring out the “meaning” of various messages. I’m guessing he felt “meaning” was much too “touchy-feely.” So, Shannon stepped over analyzing “meaning” like it was a pile of dog poop on the sidewalk, and chose instead to do a deep dive into research that would help Bell Labs understand information in a way that would allow them to quantify it.

Wait, what? Quantify information? Yep. Whenever I see the word “quantify,” I know immediately that something is being measured. In this case, Shannon was working out how to measure the amount of information contained in a message.

Why did scientists want to do this?

Whenever I think of quantifying anything, I think back to my years working for Hewlett-Packard. HP originally built instruments that measured things with brilliant accuracy. An old HP adage that I heard repeatedly during my time there was: “If you can’t measure something, you can’t improve it.” Truer words were never spoken, in my opinion, and Shannon was way ahead of the game here.

Shannon knew that if he could figure out how much information a message contains, engineers could design more efficient communication systems. And at the time (the 1940s), our country really needed better communications! Consider the most common communication systems of the day and the problems people had with them:

- Telegraph: The telegraph was slow and unreliable. It could take several minutes to send a message over a long distance, and there was always the risk of the message being garbled or lost.

- Telephone: The telephone was not very secure. It was possible for someone to listen in on a conversation if they had the right equipment.

- Radio: Radio signals could be easily intercepted. This was a major problem during World War II, when the Allies and Axis powers were trying to keep their communications secret.

- Television: Television signals could be easily corrupted by noise. This made it difficult to see the picture and hear the sound clearly, especially in areas with poor reception.

One can easily understand why there was a push for more secure, error-free communications at the time, right? Forget the party line your grandma was always listening in on. Think military. Think wartime. So, Shannon focused his energy on addressing these problems.

To help reduce or eliminate errors, he wrote a paper titled “A Mathematical Theory of Communication,” published in 1948. In this paper, he introduced the concept of channel capacity: the maximum rate at which data can be sent over a noisy channel with an arbitrarily small chance of error. The paper also proved that, as long as data is sent below that rate, there exist codes (now called “error-correcting codes”) that make errors as rare as we like. That result told engineers that vastly better communication was possible.

What the hell does that mean? In this case, it means that his seminal paper paved the way for others to develop algorithms (i.e., step-by-step procedures) that encode data (i.e., convert it) in such a way that it can still be decoded later (i.e., converted back) even after some of it has been corrupted.
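To make the idea concrete, here is a minimal Python sketch of the simplest error-correcting code there is, a triple repetition code. This is my own illustration, not a code from Shannon’s paper, and real systems use far more efficient codes; the names `encode` and `decode` are just hypothetical labels. Each bit is sent three times, and the receiver takes a majority vote, so a single flipped bit in any group of three gets corrected.

```python
def encode(bits):
    """Repeat every bit three times, e.g. [1, 0] -> [1, 1, 1, 0, 0, 0]."""
    return [b for b in bits for _ in range(3)]


def decode(coded):
    """Recover each original bit by majority vote over its group of three."""
    decoded = []
    for i in range(0, len(coded), 3):
        group = coded[i:i + 3]
        decoded.append(1 if sum(group) >= 2 else 0)
    return decoded


message = [1, 0, 1, 1]
sent = encode(message)
received = sent.copy()
received[4] ^= 1  # simulate noise flipping one bit in transit
print(decode(received))  # majority vote repairs the flipped bit
```

The price of this protection is sending three times as much data. Shannon’s theory says far smarter codes can protect data much more cheaply, which is what engineers have been chasing ever since.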

Shannon’s work on information theory helped to improve all of these methods of communication. His work on error-correcting codes made it possible to transmit data over noisy channels with fewer errors. His work on data compression made it possible to transmit data more efficiently. And his work on channel capacity established the limits of how much information could be transmitted over a given channel. The brains this guy had! Impressive!
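Channel capacity can be illustrated with a textbook simplification I’m assuming here, the “binary symmetric channel”: noise flips each bit sent with some probability p. For that model, capacity is 1 - H(p) bits per bit sent, where H(p) is the uncertainty (entropy) of the flip itself. The Python sketch below just evaluates that formula; the function names are my own.

```python
import math


def binary_entropy(p):
    """Uncertainty, in bits, of a yes/no event that happens with probability p."""
    if p in (0.0, 1.0):
        return 0.0
    return -p * math.log2(p) - (1 - p) * math.log2(1 - p)


def bsc_capacity(p):
    """Capacity, in bits per transmitted bit, when noise flips each bit with probability p."""
    return 1.0 - binary_entropy(p)


for flip_prob in (0.0, 0.01, 0.11, 0.5):
    print(f"flip probability {flip_prob:.2f}: capacity {bsc_capacity(flip_prob):.3f} bits per use")
```

A noiseless channel carries a full bit per bit sent. A channel that flips about 11% of bits can still carry roughly half a bit per bit sent, if good enough codes are used. At a 50% flip rate the output is pure noise, and capacity drops to zero.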

I’m going to go out on a limb here and assume we have all heard the term “bit” used in reference to computers, right? “Bit” is short for “binary digit.” While Shannon did not coin the word, he popularized it and made the bit the basic unit of information: a bit has just two possible values, 0 and 1. Off or on. Very simple, yet quite powerful. For my purpose here, consider the bit the smallest piece of information that can be processed by a computer.
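Because each bit has two possible values, every extra bit doubles the number of distinct patterns: n bits can label 2 to the power n different things. A small Python sketch (the function name `bits_needed` is hypothetical) counts how many bits it takes to give each of N options its own label.

```python
def bits_needed(n_options):
    """Smallest number of bits that gives each of n_options (n_options >= 1) a unique 0/1 label."""
    return (n_options - 1).bit_length()


for n in (2, 4, 26, 256):
    print(n, "options need", bits_needed(n), "bits")

# Each extra bit doubles the patterns: 3 bits give 2**3 = 8 labels, 000 through 111.
print([format(i, "03b") for i in range(8)])
```

So a coin flip needs 1 bit, a letter of the English alphabet needs 5, and a byte (8 bits) covers 256 possibilities.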

Shannon’s work on information theory had a profound impact on the development of modern communications. In fact, his work is the foundation of today’s digital communication systems, from the internet to cell phones to satellite television. Honestly, without Shannon’s efforts, it is unlikely that these systems would be as reliable, secure, and efficient as they are today.

You can probably understand why I felt it was important that I begin this series by introducing you to Claude Shannon, since he was the “Information theory OG[i].”

There’s just one more thing I want to share with you about Claude Shannon before I move on to definitions of information, and that is his introduction of the concept of “entropy” to information. Stay with me. I’m going to give you the 10,000-foot version and try not to fall into the weeds, which is very easy to do when talking about this stuff.


Entropy is a word most often associated with physics. The Second Law of Thermodynamics, for example, is all about entropy. Put simply, entropy is a metric: a measure of the disorder in a system. The more disordered a system is, the higher its entropy; the more ordered it is, the lower its entropy. Consider a deck of cards. Straight from the factory, the deck is in order, sorted by number and suit, so it has a very low entropy value. In fact, only one arrangement counts as factory order, while there is an enormous number of possible shuffled arrangements. So, shuffle the deck several times. Now you have a deck that is highly disordered, assuming you are good at shuffling cards. Thus, it now has a high entropy value.
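The card example can be put into numbers using the counting (statistical) view of entropy, which is my assumption here rather than the thermodynamic formula: if every arrangement is equally likely, the entropy in bits is the base-2 logarithm of the number of possible arrangements. A factory deck is one known arrangement, while a well-shuffled deck could be any of 52! (52 factorial) arrangements.

```python
import math

# A factory-fresh deck is in one known arrangement: nothing left to guess.
ordered_arrangements = 1
# A thoroughly shuffled deck could be in any of 52! arrangements.
shuffled_arrangements = math.factorial(52)

# Treating every arrangement as equally likely, entropy in bits is log2(count).
ordered_bits = math.log2(ordered_arrangements)
shuffled_bits = math.log2(shuffled_arrangements)

print(f"ordered deck:  {ordered_bits:.1f} bits")
print(f"shuffled deck: {shuffled_bits:.1f} bits")
```

The ordered deck comes out at zero bits of uncertainty, and the shuffled deck at roughly 226 bits: the amount of information you would need to pin down exactly which arrangement you are holding.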

Shannon, brilliant scientist that he was, figured out a way to measure the amount of entropy in communication messages. Why did he do this? Because he realized that entropy can be used to determine the amount of information in a message. For example, let’s say you are trying to guess someone’s password. If the password could literally be anything, then it has a very high entropy value (good luck figuring it out). If, on the other hand, you know that the password will be the name of a family member or one of their three pets, then the password has low entropy and will be easier to guess. Note to self: always choose a password with high entropy!
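Shannon’s entropy formula, H = -Σ p log2(p) summed over all possible outcomes, makes the password comparison concrete. Below is a minimal Python sketch. The 12-character password drawn from 94 printable symbols is a hypothetical scenario I’m assuming for contrast, and both cases assume every option is equally likely.

```python
import math


def entropy_bits(probabilities):
    """Shannon entropy H = sum(p * log2(1/p)), in bits, over a list of outcome probabilities."""
    return sum(p * math.log2(1 / p) for p in probabilities if p > 0)


# Guessable password: one of 3 pet names, each equally likely (hypothetical scenario).
pet_password = entropy_bits([1 / 3] * 3)

# Random password: 12 characters, each picked uniformly from 94 printable ASCII symbols.
# Independent characters add their entropies, so this is 12 * log2(94).
random_password = 12 * entropy_bits([1 / 94] * 94)

print(f"pet-name password: {pet_password:.2f} bits")
print(f"random password:   {random_password:.1f} bits")
```

Each extra bit of entropy doubles the number of guesses an attacker may need, so under 2 bits versus nearly 79 bits is the difference between three guesses and an astronomically large number of them.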

So, while Shannon did not create information theory out of thin air, his work was the first to provide a comprehensive mathematical framework for understanding information. This work had a profound impact on the development of many fields, and it is considered to be one of the most important contributions to the field of information technology.

Defining Information:

I’m going to use three different viewpoints of information here. The first is the viewpoint of mathematicians, the second is that of physicists, and the third is that of philosophers.

Definition of information used by mathematicians:

In a nutshell, the mathematical definition of information is exactly the one Shannon himself used: information is measured in terms of uncertainty. Put another way, information is anything that reduces the uncertainty we have about something. If you think about this, it makes sense, right? For example, if you know there is a 50% chance of rain today, you are uncertain about whether it will rain or not. However, if you hear a forecast that says there is now a 100% chance of rain today, that uncertainty is gone. In other words, the weather forecast gave you the information that reduced your uncertainty. This is a weird way of looking at it, I think, but it works.
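The rain example can be worked out with Shannon’s entropy formula. Here is a minimal Python sketch, assuming just two outcomes (rain or no rain) and taking the forecast at face value; the function name is my own.

```python
import math


def uncertainty_bits(probabilities):
    """Shannon entropy, in bits, of a set of outcome probabilities."""
    return sum(p * math.log2(1 / p) for p in probabilities if p > 0)


before = uncertainty_bits([0.5, 0.5])  # 50% rain, 50% no rain
after = uncertainty_bits([1.0, 0.0])   # forecast: 100% rain

information_gained = before - after
print(f"before: {before} bits, after: {after} bits, gained: {information_gained} bit")
```

A 50/50 question carries exactly 1 bit of uncertainty, and a certain outcome carries none, so the forecast delivered 1 bit of information.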

Definition of information used by physicists:

Physicists are not satisfied with just one definition, so they use several.

One definition will be familiar, as they define information itself as entropy. In other words, the more disorder there is in a system, the more information it has in it. That’s because the more disorder there is in a system, the more information is required to describe it.
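One rough way to see the link between disorder and description length is data compression, which Shannon’s theory also covers. The Python sketch below uses the standard `zlib` module as a practical stand-in; a general-purpose compressor only approximates the theoretical limit, so treat the sizes as illustrative.

```python
import random
import zlib

ordered = b"A" * 1000  # highly ordered: one symbol repeated
rng = random.Random(42)  # fixed seed so the run is repeatable
disordered = bytes(rng.randrange(256) for _ in range(1000))  # random bytes

# Compressed size is a rough stand-in for "how much it takes to describe" the data.
print("ordered:   ", len(zlib.compress(ordered)), "bytes after compression")
print("disordered:", len(zlib.compress(disordered)), "bytes after compression")
```

The ordered data shrinks to a handful of bytes (“the letter A, a thousand times”), while the random data barely shrinks at all: there is no shorter way to describe it than to spell it out.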

Another definition used by physicists treats information as a measure of complexity. In this definition, the more complex something is, the more information it contains.

Physicists also define information as a measure of meaning. It’s interesting that, rather than just dipping a toe into “meaning,” they seem willing to jump into the deep end of the pool! In this sense, information measures how relevant something is to us. Thus, a news article about the city closing the road to your house is more relevant to you (and so carries more information for you) than a news article about a basketball game in another state.

Definition of information used by philosophers:

Again, there is no single definition, but philosophers are notorious for their willingness to tread water in the deep end of the pool.

First, philosophers describe information as a pattern that can be found in data.

Hoo boy, I just opened a can of worms. Before I go further, please be aware that data and information are different. Put simply, data refers to raw facts or observations, whereas information is data that has been processed and is considered meaningful. For example, the number 503 is, by itself, just data. But 503 becomes information when you organize it into the statement, “503 is an area code for Oregon.”

Not afraid of the deep end of the pool, philosophers also define information as a way of conveying “meaning.” The meaning that is conveyed is what makes the information valuable.

And philosophers go even further, defining information as a representation of reality itself. A map is an example: it contains information about the world it represents.

In conclusion, I think I’ve given you enough to noodle on. For my next post in this series, I’ll explore the questions around whether or not a mind is required for information to exist at all.

[i] OG = Original Gangster.