Exploring the Capabilities and Types of Artificial Intelligence

The advent of artificial intelligence (AI) is upon us. Since 2017, when researchers at Google introduced a neural-network architecture known as the “Transformer,” and large language models (LLMs) built on it followed, AI development has taken off on a very steep trajectory. It is now being discussed in schools, hospitals, businesses, and government, by the military, and by folks at the coffee shop. (And, believe it or not, there is a scientific answer as to why the development trajectory has been so steep. More on this in a future post!)

Here, then, are some ways of looking at artificial intelligence that I think you should all be aware of:

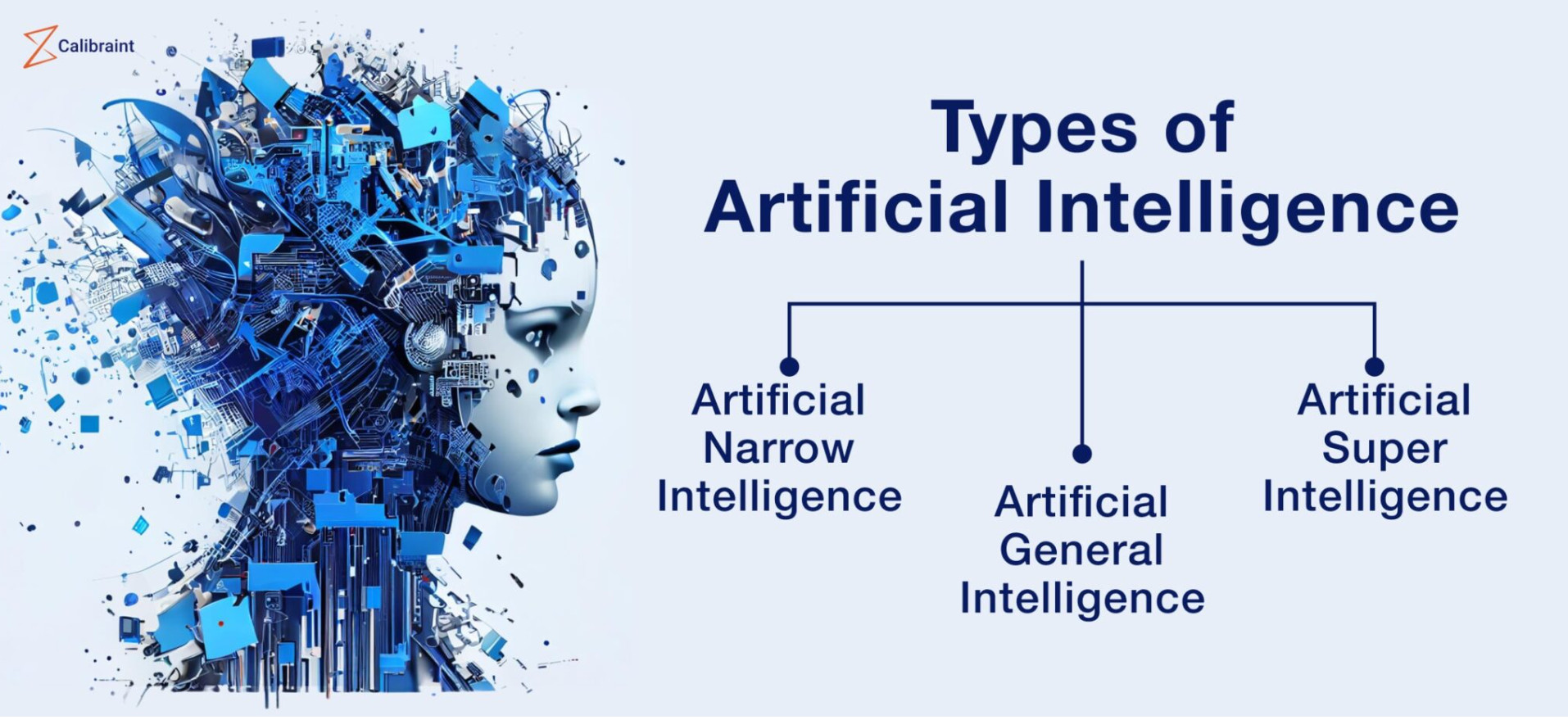

Types of AI:

The types of AI are easy to remember. The types are: weak, strong, and super!

“Weak AI,” a.k.a. “Narrow AI”

Weak AI refers to an AI that has been trained to do just one very specific task (call it a “narrow task,” if you will), sometimes even better than a human can. Thus, it is also sometimes called “Narrow AI.” We use this type of AI all the time!

A great example of Narrow AI is one you’ve probably heard about. In the world of gaming, DeepMind’s[i] AlphaGo shows how AI can master complex games that require depth and foresight. Go is an ancient Chinese strategy game known for its vast number of possible moves and the depth of strategy it demands. It was once considered a very challenging frontier for AI, even more so than chess. Yet AlphaGo, a Narrow AI, learned the game largely by playing against itself (after first studying games played by human experts), and in 2016 it defeated Lee Sedol, one of the world’s top players, winning four of their five games. Lee later retired from professional Go in 2019, saying that AI was “an entity that cannot be defeated.”

Other examples of weak AI are:

- Speech recognition software

- Chatbots

- Image recognition systems

- Voice assistants, such as Siri, Alexa, and Google Assistant

- Recommendation systems

- Data mining and analysis

- Language translation

- Playing games

“Strong AI” or “AGI” (Artificial General Intelligence)

We’re hearing a lot about AGI in the news right now, and I think it’s safe to say that “AGI” is now the name preferred by people in the field for this type of AI, rather than “strong AI.” Please remember that no AGI has been realized yet! We’re being told that it is on its way, but it is not a real thing yet! We can expect to hear more buzz about the AGI the closer we get to it.

Great, so what is the AGI supposed to be like once it arrives? Well, the AGI is a hypothetical form of artificial intelligence that can learn and think just like a human, or rather, better than a human! We will not be able to tell the difference! In fact, the AGI will be smarter than any human in history and will be capable of performing any intellectual task that a human can, and doing it better. The AGI will have a deep understanding of the world and how everything works, because it will have been trained on damn near everything.

Many expect the AGI to be self-aware (some will argue “conscious”), which raises ethical concerns. Whether or not it’s conscious is something we’ll have to decide, but in the meantime the AGI will be capable of adapting to different environments on its own and will be the smartest “thing” on the planet, capable of solving problems that are much harder, if not impossible, for humans to solve. I like to refer to it as an “entity,” because that is what it will be. For example, an AGI could teach itself to read an X-ray, analyze a patient’s medical history, and make a diagnosis. Imagine taking something this intelligent and focusing it on environmental problems, energy problems, and so on. In 1965, the mathematician I.J. Good wrote that the first “ultraintelligent machine” (his term for what we would now call an AGI) would be “the last invention that man need ever make.” He may be right! Once an AGI can replicate itself, fix itself, and even improve itself, we’re off to the races, folks. This will be one of the most disruptive events in human history, because afterward nothing will be the same.

Fascinating! And still speculative. Note that AGI is currently only theoretical. It ain’t here… yet! But it is coming! When will it arrive? I’ll talk about those predictions in another post. I say this even though leaks from OpenAI suggest that its AI is already starting to act like an AGI.

Also note that it isn’t as if there will be one AGI that arrives, although they have to start someplace (I’m guessing OpenAI or Google). There will be many! They will be focused and trained in different fields. You could have multiple AGIs focused on different aspects of the health industry, finding cures for illnesses that are beyond us today. You could have AGIs focused on environmental science, finding solutions to the climate change problems plaguing us today.

The beauty of an AGI is that it can use previous learnings and skills to accomplish new tasks in a different context, without the need for humans to train the underlying models! If an AGI wants to learn how to perform a new task, it will figure it out by itself. This may sound a bit disconcerting. But don’t get your panties in a bunch; I haven’t even discussed the third type of AI capability yet, and that’s the “super AI.”

Strong AI will play a big part in:

- Cybersecurity.

- Self-driving cars.

- Entertainment.

- Behavioral recognition and prediction.

- Language processing.

- Everything!

“Super AI” (Singularity)

[Sometimes called artificial superintelligence, or ASI] A Super AI will think, reason, learn, make judgements, and possess cognitive abilities that surpass us humans. A Super AI will have evolved beyond the point of catering to human sentiments and experiences, and will be able to feel emotions, needs and desires of its own.

The Singularity, the hypothetical point at which machine intelligence outstrips our own and technological change races beyond our control, is what most people associate with Skynet from the Terminator movies. Scientists aren’t sure whether the Singularity will come from advances in computing power or learning alone, or through some other means, such as a breakthrough in nanotechnology, or some combination thereof! Time will tell.

What’s it all mean?

We are fortunate to have a bit of an advantage here, folks, in that we can watch the evolution of an entirely new technology happen before our eyes. So often, we find out about such things after they’ve already arrived (e.g., the internet), leaving people wondering, “What the hell happened?” Of course, if you sleep through this evolution, then you’re going to miss it, but it will still affect you. And that would be sad. Because you may just wake up one day to discover that your entire life as you know it has changed as a result of this technology and will never be the same. I guess this could be more of an “Oh shit!” moment. Who wants one of those?

While you don’t have to pursue it as doggedly as some of us choose to, I recommend that you at least periodically read about it, or watch a YouTube video, or pay attention when it’s in the news, so that you are not caught completely unaware or, worse, blindsided when a new type of machine intelligence arrives.

Companies like OpenAI and Google have recently announced that they are changing their business models, and even the way they are organized internally, to prepare for the arrival of artificial general intelligence (AGI), which they expect within the next couple of years. In fact, they are intentionally making it difficult for their employees to know everything that is going on!

[ For example, in 2023 Google merged its two main AI research groups, Google Brain and DeepMind, into a single unit called Google DeepMind, led by Demis Hassabis, with Jeff Dean as chief scientist. The move centralizes AI research and development across Google’s products and services. As I read it, it also keeps any single team or individual from holding sole control over potentially powerful AGI advancements. In other venues, like military intelligence, this would be called “compartmentalization.” It’s a smooth move and speaks to the seriousness of Google’s belief that AGI is not far off. ]

[ OpenAI is also getting its ducks in a row by jumping head-first into the brand-spanking-new realm of AGI safety research. A significant portion of OpenAI’s research now focuses on safety measures for AGI, including alignment research, which develops techniques to ensure AI systems remain compatible with human values and goals. “Alignment” is an important concept and a feature all of us will want in an AGI. It simply means that the AGI’s goals and values are “aligned” with our human ambitions, goals, and values, so that what we want, it wants, and it won’t go off half-cocked somewhere and do something terrible. ]

You need to see their videos and interviews to get a sense for just how serious Google and OpenAI are about these efforts. They are the ones calling for regulations, which is a bit of a first, right? They know what they’ve got behind closed doors and we don’t, not really, not yet. To hear these folks telling everyone, “Brace yourselves! The AGI is coming,” is a message I think we should listen to and start preparing for.

I’ll be the first to say that it can be confusing. It’s good to remember, as you read through the information in this post, which AIs are here today and which ones are still theory! (I’ll blow your minds later with another post talking about just how fast the field of AI is advancing.)

So, I hope I’ve helped demystify AI a bit for you by identifying the different types of AI and explaining how they will work. Think of this post as a preliminary roadmap, one that will help you decipher the headlines, follow curious conversations, and maybe even pique your interest enough to start paying attention. Now buckle up, grab your virtual thinking cap, and prepare to journey into the fascinating world of artificial intelligence!

[i] Google DeepMind is a British-American subsidiary of Google that specializes in artificial intelligence (AI), machine learning, and neuroscience research. The company was founded in 2010 and is a division of Alphabet Inc.

Jeff, I really like you sharing your insights about AI, and I agree that AI can be used to accomplish great things. But I am very worried about its dangers. While ChatGPT’s “creation” of a new uncle for me and Copilot’s creation of a new family business (at least Copilot gives links to where it got the false info) are somewhat amusing, the ability to use it to create totally fake images and voices, etc., is terrifying: NOBODY will be able to separate fact from fiction. Who can be trusted?

It’s already almost impossible to figure out whether anything online is a scam or not.

Hi Gordy!

Thanks so much for reading my blog – and for actually responding. I love it!

I, too, am concerned about the dangers of AI. I am working on another blog post right now about AI predictions for the future, in which I hope to explore the darker side of AI. I fear that some who know the positive things AI can do are just a bit too optimistic in their outlook. I’m curious: how did ChatGPT create “a new uncle” for you? Also, what’s this about Copilot creating a new family business? Tell me more, please!

Yes, the fear of fake information is very real and I share your concern! The most we can hope to do is stay informed, at least for now.

P.S. Please let me know if you get this reply. I rarely get any comments, so it is good to know that the comment system is working.

Jeff

During WW2, my Uncle Gottfried Voltz worked for Messerschmitt, and he was one of the pilots who would inspect and fly new Me 262s when they came off the assembly line.

When I did a “Voltz and ME262” query, ChatGPT combined the birthplace and history of Gottfried with another Me 262 pilot and in effect “created” a new uncle, Helmut Voltz, whom none of us had ever heard of. Bard, for its part, said that the “Voltz factory” made the jet engines for the plane. That’s news to us.

As far as the dangers of AI go, I just don’t see any way to control it or stop its use by bad actors, and that will shortly lead to the total collapse of anything done online: nothing can be trusted, because there’s no way to distinguish the real from the fake.
